AI was already in use across many business sectors, but it was the release of ChatGPT in November 2022 that opened the floodgates. The global AI market is projected to be worth USD 1,811.8 billion by 2030.

That projection reflects how actively businesses are adopting AI in their operations, and its influence on business will keep growing. The challenge is that the inner workings of most AI models are opaque to the people who use them. How does a model produce coherent paragraphs, images, and videos?

As a result, questions about accountability and privacy are already being raised in the field. This is where explainable AI becomes important, and it is the subject of this post.

What’s Explainable AI?

Today, when an AI system performs a task, the user usually cannot tell why it produced a particular result. Most systems give no logical explanation for the answers they return.

This is why many in the industry question AI output: we cannot tell whether producing an answer involved an ethical or privacy violation. Explainable AI addresses this problem.

It is designed to describe the purpose, logic, and reasoning behind its results, in terms that a non-technical person can also understand.
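
To make the idea concrete, here is a minimal sketch of a decision that carries its own plain-language reasons. It is not taken from any real system: the `Decision` class, the `review_application` function, and both thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    approved: bool
    reasons: list = field(default_factory=list)


# Hypothetical thresholds, used only for this illustration.
MIN_GPA = 3.0
MIN_TEST_SCORE = 1200


def review_application(gpa, test_score):
    """Apply each rule and record, in plain language, what it found."""
    gpa_ok = gpa >= MIN_GPA
    score_ok = test_score >= MIN_TEST_SCORE
    reasons = [
        f"GPA {gpa} {'meets' if gpa_ok else 'is below'} the minimum of {MIN_GPA}",
        f"Test score {test_score} {'meets' if score_ok else 'is below'} "
        f"the minimum of {MIN_TEST_SCORE}",
    ]
    return Decision(approved=gpa_ok and score_ok, reasons=reasons)


decision = review_application(gpa=2.8, test_score=1350)
print("Approved:", decision.approved)
for reason in decision.reasons:
    print("-", reason)
```

The result is returned together with the reasons that produced it, so a reviewer can see which rule led to the outcome instead of receiving a bare yes or no.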

The Economic Potential of AI

One of the biggest ways generative AI can influence businesses is its ability to improve productivity.

A McKinsey report estimates that generative AI could add between USD 2.6 trillion and USD 4.4 trillion in value a year. Figures of that size can change the landscape of any business sector.

According to the Washington Post, over 11,000 businesses have taken advantage of the Cloud AI capabilities offered by Microsoft in conjunction with OpenAI.

These are just two examples of how AI is steadily taking hold in business and changing how work gets done. This is also why explainable AI matters and can add further benefits: it makes it easier for businesses to adopt AI for productivity gains with fewer concerns about the ethical and accountability implications.

What Makes Explainable AI Important?

In addition to using the AI tools already on the market, businesses are investing millions of dollars in developing new AI models. They are also using artificial intelligence in development to speed up the process.

When AI is used in development, its ethical and accountability implications matter a great deal.

Here are a few things to think about.

When AI makes decisions that cannot be explained, there are social consequences, and those decisions can touch almost any area of life.

For example, an AI system could deny a person admission to a college or university without giving a logical explanation, and the decision could be accepted without anyone examining the reason for it.

Transparency therefore becomes a challenge when AI is used in major decision-making processes. We are asked to trust AI blindly and to assume that whatever result it generates is the best one.

That, we think, is far from ideal.

A real-world example is the tragic Uber incident in 2018, in which a self-driving car from Uber misinterpreted its environment, resulting in a fatal accident.

There are other examples of such failures, and they strengthen the case for explainable AI. It helps us understand not only where things went wrong but also how these issues can be resolved.

What Are the Benefits of Explainable AI?

AI has taken the world by storm, and many businesses already use artificial intelligence in development projects to speed up their processes.

Adopting explainable AI is more than a business decision about understanding the logic behind AI reasoning.

Here are the benefits of developing explainable AI.

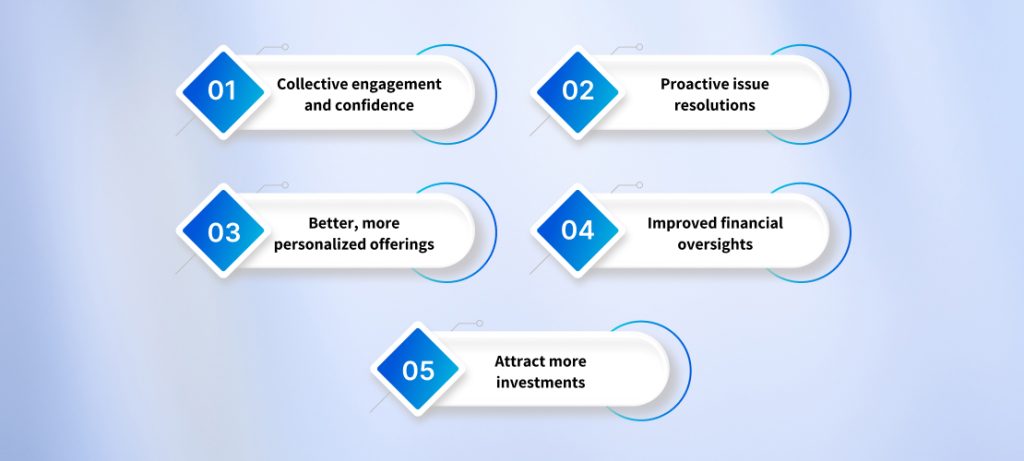

Collective engagement and confidence.

When a team uses AI in development, not everyone understands how it will behave or what results it will produce, so the decisions it makes are less transparent.

Explainable AI avoids this because it can explain the logic behind each decision.

Such a move will help the teams working on the project to be more involved and in control.

Proactive issue resolution.

It is common to encounter issues during web application development. With explainable AI, the team can understand these issues more easily.

This leads to faster fixes before a problem escalates into something more serious.

Better, more personalized offerings.

Although businesses now use AI in development and marketing, they don’t always understand the why behind AI’s decisions.

With the help of explainable AI, businesses can understand what triggers AI to make certain decisions.

With that understanding, they can control the outcome more precisely and tailor what they offer.

Improved financial oversight.

AI-driven models are widely used in the financial space. Users often have to rely on a model's results without knowing whether its predictions are accurate.

With explainable AI in development, businesses can understand the rationale behind the outcomes. Based on these insights, the model can be improved.

Attract more investment.

Ever since AI made its splash in the world, businesses have been racing to develop AI models. However, investors have become more sensitive to the privacy and ethical issues of using artificial intelligence in development.

Building on explainable AI can therefore help businesses attract more investment, because it shows investors that these concerns are being addressed.

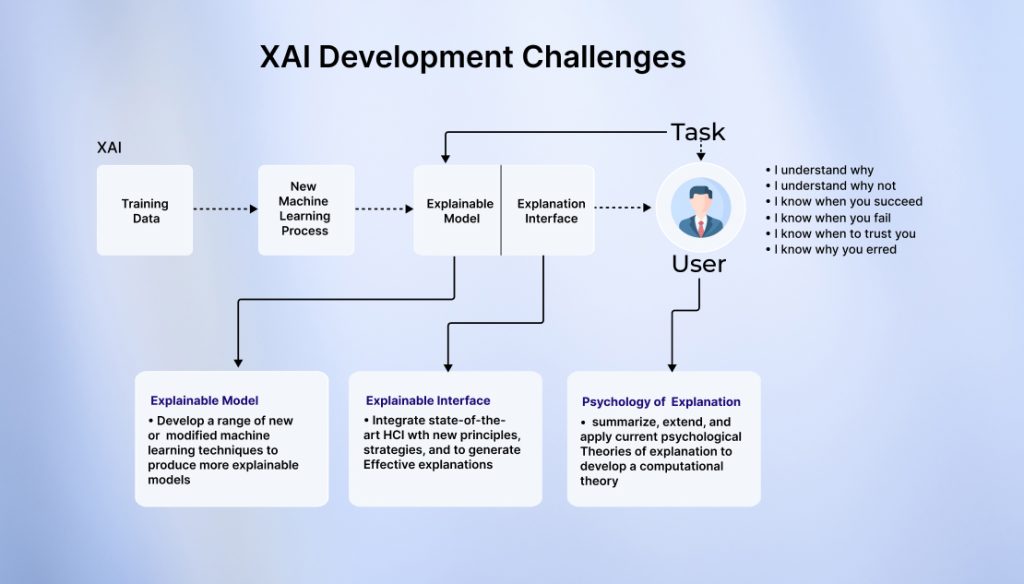

Explainable AI Model: A Blueprint

Developing an explainable AI model is more than just coding. It is a complex process that includes the following:

- Strategic planning

- Continuous and rigorous testing

- Iterative refinement

These activities work in a loop to deliver AI results that are transparent and better controlled.

Here is a step-by-step guide you can use to develop one.

Step #1: Identify the problem domain.

This is the first step in developing an explainable AI. Here, we understand the problem and the people who are involved in it.

We ask the following questions to gain more insight:

- What are users expecting?

- What answers will AI deliver?

- What are the impacts of the answers given by the AI?

Step #2: Choose the right data set.

Without the right data set, it is impossible to develop a reliable AI model, so it is imperative to choose data that is accurate and high-quality.

At AddWeb, when we develop explainable AI, we use clean, relevant data that has been screened for bias. This helps us ensure top-notch AI development.
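
One simple way to screen a data set for a possible bias is to compare outcome rates across groups. The sketch below is an illustration under stated assumptions, not a complete fairness audit: the rows, the `group` and `admitted` column names, and the `positive_rate_by_group` helper are all hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(rows, group_key, label_key):
    """Return the share of positive labels for each group in the data."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for row in rows:
        counts[row[group_key]][0] += 1 if row[label_key] else 0
        counts[row[group_key]][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


# Hypothetical training rows, invented for this example.
rows = [
    {"group": "A", "admitted": 1},
    {"group": "A", "admitted": 1},
    {"group": "A", "admitted": 0},
    {"group": "A", "admitted": 1},
    {"group": "B", "admitted": 0},
    {"group": "B", "admitted": 1},
    {"group": "B", "admitted": 0},
    {"group": "B", "admitted": 0},
]

rates = positive_rate_by_group(rows, "group", "admitted")
gap = max(rates.values()) - min(rates.values())
print(rates, "gap:", gap)
```

A large gap does not prove that the data is biased, but it flags the data set for a closer look before any model is trained on it.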

Step #3: Choose the right AI model.

Different types of models can be used to build an AI system, and some are more complex than others. Deep neural networks are hard to interpret, whereas models such as linear regression or decision trees are easier to interpret.

During development, we strike a balance between predictive performance and interpretability.
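
As a small illustration of why a linear model is easier to interpret, the sketch below splits a prediction into one contribution per feature. The weights, the feature names, and the `predict_with_contributions` helper are hypothetical; a real model would learn its weights from data.

```python
# Hypothetical weights for an already-trained linear scoring model.
WEIGHTS = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = -1.0


def predict_with_contributions(features):
    """Return the score and how much each feature added to or removed from it."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions


score, contributions = predict_with_contributions(
    {"income": 4.0, "debt": 2.0, "years_employed": 4.0}
)
print("score:", score)
for name, value in contributions.items():
    print(f"{name}: {value:+.2f}")
```

Because the score is just the bias plus the sum of the contributions, each feature's effect can be read directly. A deep neural network offers no such direct breakdown, which is the trade-off described above.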

Step #4: Iterative testing.

At AddWeb, we carry out adequate and consistent testing of the AI model using various data sets to ensure the model’s performance and accuracy.

We also collect feedback and insights from these tests, which are used to improve the model further.
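
A minimal sketch of testing one model against several data sets might look like the following. The `threshold_model` stand-in, the two data sets, and the 0.8 accuracy target are assumptions made for illustration only.

```python
def accuracy(model, dataset):
    """Share of (input, label) pairs the model predicts correctly."""
    correct = sum(1 for value, label in dataset if model(value) == label)
    return correct / len(dataset)


def threshold_model(value):
    # Hypothetical stand-in for a trained model.
    return 1 if value >= 0.5 else 0


# Hypothetical evaluation sets: one typical, one with shifted behaviour.
datasets = {
    "validation": [(0.9, 1), (0.2, 0), (0.7, 1), (0.4, 0)],
    "shifted": [(0.6, 0), (0.3, 0), (0.8, 1), (0.55, 0)],
}

report = {name: accuracy(threshold_model, data) for name, data in datasets.items()}
weak_sets = [name for name, acc in report.items() if acc < 0.8]
print(report)
print("needs another iteration on:", weak_sets)
```

Data sets that fall below the target feed the next round of refinement, which is what makes the testing iterative.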

Step #5: Using explainability tools.

Using tools such as SHAP or LIME, we break down model predictions. This gives us more insight into which features drive which decisions.

This enables us to make the model even more accurate and efficient.
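
SHAP is built on Shapley values, which split a prediction among the input features. The sketch below computes exact Shapley values by brute force for a tiny, hypothetical scoring function. It does not use the SHAP library or its API, and the `model` function, `instance`, and `baseline` are invented for illustration. This approach is only practical for a handful of features, because the number of orderings grows factorially.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Average each feature's marginal effect over every feature ordering."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)  # start from the reference input
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # switch this feature to its real value
            value = model(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def model(features):
    # Hypothetical scoring function with an interaction between the features.
    return (2.0 * features["income"] - 1.0 * features["debt"]
            + 0.25 * features["income"] * features["debt"])


instance = {"income": 4.0, "debt": 2.0}
baseline = {"income": 0.0, "debt": 0.0}

values = shapley_values(model, instance, baseline)
print(values)
```

The attributions add up to the difference between the prediction for the instance and the prediction for the baseline, so the whole prediction is accounted for feature by feature.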

Step #6: Documentation.

This is a crucial element of explainable AI development, as it helps verify, validate, and deploy the models accurately and on time.

As a result, everyone, from users to developers, understands how the AI performs and the decisions it makes.
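
One lightweight way to keep such documentation next to the model is a structured record that can be exported and shared. This is a sketch only: the `ModelRecord` class, its fields, and every value in the example are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    name: str
    intended_use: str
    training_data: str
    explainability_tools: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)


# Hypothetical entry, invented for this example.
record = ModelRecord(
    name="admissions-screening-demo",
    intended_use="Illustrative example only",
    training_data="Synthetic applications",
    explainability_tools=["SHAP", "LIME"],
    known_limitations=["Not validated on real applicants"],
)

document = json.dumps(asdict(record), indent=2)
print(document)
```

A record like this can be versioned with the model so that users and developers read the same description of what it does and where it falls short.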

How Can We Help You in Developing Explainable AI Models?

As a pioneer in the field of technology, AddWeb Solution has developed AI products and services for clients around the world.

We have the experience and expertise to help you do the same.

Here are a few advantages of working with us:

- AddWeb Solution has been in the industry for more than a decade, so you can draw on that depth of expertise and experience.

- At AddWeb, whether you are looking for help with AI in development or any other service, we deliver cost-effective services.

- Our team uses the latest technologies, including artificial intelligence, in development to build polished products and solutions.

- We understand that every business is unique, with its own goals and needs. As such, we offer custom AI development solutions to everyone.

- At AddWeb Solution, we hold ourselves to the highest standards possible when it comes to AI development. Hence, you don’t need to worry about legal and security compliance when you work with us.

Conclusion

There is no doubt that artificial intelligence is here to stay. However, we need to use AI in development in ways that are ethical and consistent with social norms. Only that will help us create a better world with the help of AI. Doing so takes more than developing an AI model; it means creating AI-driven solutions with businesses that understand their social responsibility. This is where AddWeb Solution can help you.

With more than a decade of expertise in technology, we can help you develop explainable AI. Our client service team is ready to talk to you about your requirements. Speak to us.